Intel is set to ship their new Nervana Neural Network processors by the end of 2017

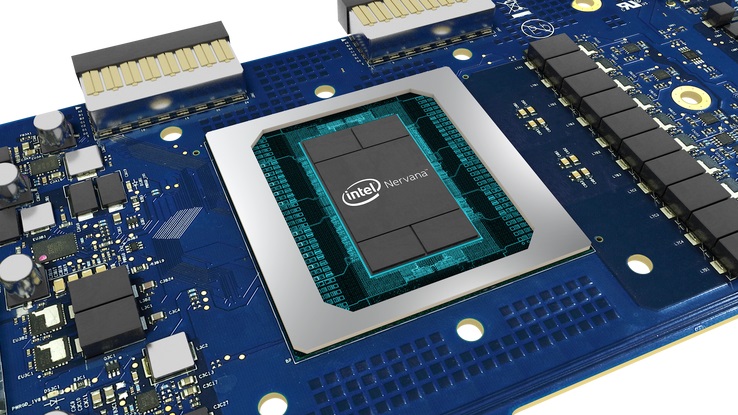

Intel’s Nervana NNP is a new architecture designed for deep learning. It offers the flexibility to work with all deep learning primitives efficiently, freeing developers from the restrictions of existing hardware, which was not developed with AI in mind.

These new chips are based on technology originally created by Nervana Systems, a deep learning hardware startup that Intel purchased in August 2016 for $350 million. Since the purchase, Intel has been aiming to improve its AI/deep learning training performance by 100x by 2020, a target the company says it is currently on track to exceed.

One of the interesting features of Intel’s new Nervana NNP chip is the ability for software to manage the chip’s on-chip memory directly, which can be used to achieve higher levels of utilisation and therefore increase the performance of the chip.

Intel has also designed its Nervana NNP processors with scalability and numerical parallelism in mind, pairing high-speed links for bi-directional data transfer with a proprietary numerical format called Flexpoint to achieve higher levels of throughput.

Sadly, Intel did not reveal any benchmark numbers alongside these new Nervana NNP processors, though the company plans to ship them by the end of 2017, so further technical insights should be available soon. Judging by Intel’s rendered images, these new AI chips use HBM2 memory (likely 32GB across four 8GB stacks), though Intel has not gone into any specifics about the chip’s design at this time.

It looks like Intel plans to build a larger product line based on its Nervana NNP chip designs, with many expecting a future Xeon processor called “Knights Crest”.

You can join the discussion on Intel’s Nervana Neural Network processors on the OC3D Forums.
