Nvidia announces its 80GB A100 – A big memory upgrade for Ampere

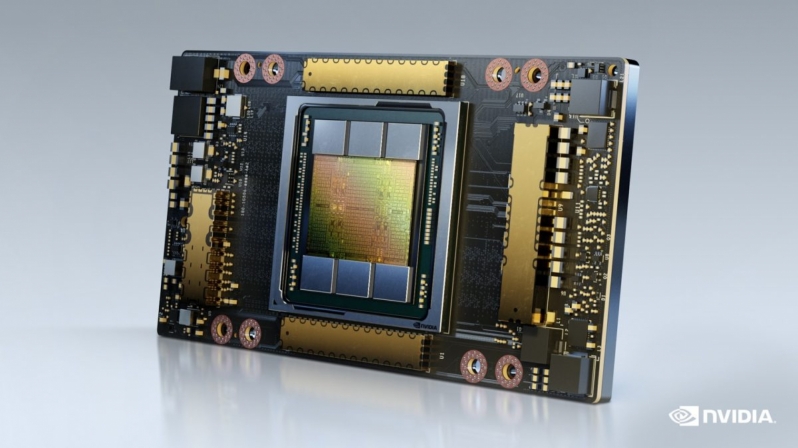

Nvidia’s new Ampere A100 accelerator will feature a whopping 80GB of HBM2E memory and 2 TB/s of memory bandwidth, doubling the DRAM capacity of the original A100 while also offering users a 25% increase in the accelerator’s total memory bandwidth. These changes will allow the new A100 models to handle larger datasets and move data to and from their memory at a faster rate.

When compared to Nvidia’s older A100 chip, this new 80GB model offers users the same core specifications, boasting 6912 FP32 CUDA cores, NVLINK 3 support and the same 400W TDP as before.

Like its predecessor, the 80GB A100 continues to use five of the six HBM2E memory stacks that the accelerator has on its package. This means that it is still possible for Nvidia to release higher capacity versions of its A100 silicon. If all six HBM2E stacks were used on these new chips, Nvidia would gain a 20% increase in memory bandwidth and an extra 16GB of memory capacity. Only time will tell if Nvidia will ever bring a full-fat A100 accelerator to market.
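The 20% and 16GB figures follow from simple per-stack arithmetic. The sketch below assumes that capacity and bandwidth scale linearly with the number of active HBM2E stacks and uses the rounded 2 TB/s figure quoted above; the function and variable names are illustrative only.

```python
# Figures from the article: 80GB and roughly 2 TB/s across five active stacks.
ACTIVE_STACKS = 5
TOTAL_STACKS = 6
CAPACITY_GB = 80
BANDWIDTH_TBPS = 2.0  # rounded figure, so results are approximate


def scale_to_full_package(value, active=ACTIVE_STACKS, total=TOTAL_STACKS):
    """Scale a package-level figure linearly from `active` to `total` stacks.

    Assumption: every stack contributes equally to capacity and bandwidth.
    """
    return value / active * total


full_capacity_gb = scale_to_full_package(CAPACITY_GB)
full_bandwidth_tbps = scale_to_full_package(BANDWIDTH_TBPS)

extra_capacity_gb = full_capacity_gb - CAPACITY_GB
bandwidth_gain_pct = (full_bandwidth_tbps / BANDWIDTH_TBPS - 1) * 100

print(f"Hypothetical six-stack capacity: {full_capacity_gb:.0f}GB "
      f"(+{extra_capacity_gb:.0f}GB)")
print(f"Hypothetical six-stack bandwidth: {full_bandwidth_tbps:.1f} TB/s "
      f"(+{bandwidth_gain_pct:.0f}%)")
```

Under these assumptions, a hypothetical six-stack part would land at 96GB and around 2.4 TB/s. Neither figure describes an announced product.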

The additional memory capacity of these new accelerators will give developers more freedom when using Nvidia’s A100 silicon, allowing users to increase the complexity of their data without relying on slower off-chip memory.

Within its latest DGX systems, Nvidia can offer users up to 640GB of HBM2E capacity across eight new A100 accelerators. Nvidia’s DGX A100 640GB system also contains double the system memory and storage capacity of its predecessor, giving buyers a little more of everything.
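The 640GB total is simply the per-accelerator capacity multiplied across the system. As a quick check, the sketch below compares eight 80GB accelerators with eight of the original parts, whose 40GB capacity is inferred from the "doubling" claim earlier in the article; the helper name is illustrative.

```python
GPUS_PER_DGX = 8  # eight A100 accelerators per DGX A100 system, per the article


def dgx_hbm_capacity_gb(per_gpu_gb, gpus=GPUS_PER_DGX):
    """Total HBM2E capacity across all accelerators in one system."""
    return per_gpu_gb * gpus


new_total_gb = dgx_hbm_capacity_gb(80)
# 40GB is inferred from the article's statement that 80GB doubles the original.
old_total_gb = dgx_hbm_capacity_gb(40)

print(f"80GB accelerators: {new_total_gb}GB of HBM2E per system")
print(f"40GB accelerators: {old_total_gb}GB of HBM2E per system")
```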

While AMD has become a lot more competitive with Nvidia within the HPC market thanks to its Instinct MI100, it still has a long way to go before it can match Nvidia’s offerings. Engineers and scientists have been using Nvidia-optimised software for years, and AMD’s MI100 only offers users 32GB of onboard memory. For those who use larger datasets, Nvidia’s A100 80GB is an offering that AMD can’t compete with.

You can join the discussion on Nvidia’s 80GB A100 accelerator on the OC3D Forums.