Nvidia showcases AI Slow Motion Video Conversion Tech

Nvidia’s research arm has created a deep learning system that can generate high-quality slow-motion video from standard 30FPS footage, producing a reasonably accurate approximation of true slow-motion video without the need for specialised equipment.

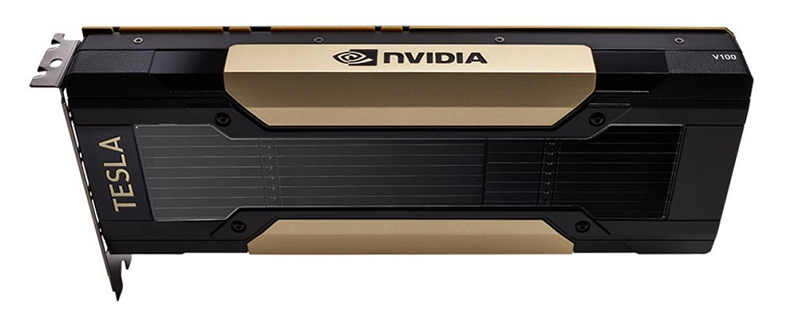

Using its AI-focused Tesla V100 GPUs and the cuDNN-accelerated PyTorch deep learning framework, Nvidia trained the system to predict extra frames for video content. This allows low-framerate footage to be given extra fluidity and existing slow-motion footage to be made even slower, up to four times slower.

The technology can create multiple intermediate frames between existing frames, making footage several times more fluid when played back side by side with the original. Nvidia has stated that it used 11,000 240FPS videos to train the AI, allowing it to approximate the missing detail in low-framerate footage for extra fluidity.
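To show what "intermediate frames" means in practice, the sketch below places k new frames at evenly spaced times between two existing frames. It assumes a hypothetical representation where a frame is a flat list of grayscale pixel values, and it uses naive linear cross-fading, which is a simple baseline and not Nvidia's learned model; a trained network instead predicts what each in-between frame should look like.

```python
from typing import List

# Hypothetical frame representation: a flat list of grayscale pixel values.
Frame = List[float]


def blend_intermediate_frames(frame_a: Frame, frame_b: Frame, k: int) -> List[Frame]:
    """Return k evenly spaced frames between frame_a and frame_b.

    Naive linear cross-fade baseline (not Nvidia's method): each pixel of the
    frame at normalized time t is (1 - t) * a + t * b.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    if k < 0:
        raise ValueError("k must be non-negative")

    frames: List[Frame] = []
    for i in range(1, k + 1):
        t = i / (k + 1)  # time of the i-th new frame, strictly between 0 and 1
        frames.append([(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)])
    return frames


# Seven in-between frames for a two-pixel "video".
mid_frames = blend_intermediate_frames([0.0, 0.0], [8.0, 16.0], 7)
print(len(mid_frames), mid_frames[0], mid_frames[-1])
```

Cross-fading like this tends to produce ghosting on moving objects, which is why a model trained on real high-framerate footage can give more convincing results.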

While high-framerate cameras are becoming increasingly common in modern smartphones, such hardware is both power- and memory-intensive and is typically found only on high-cost devices, which makes this AI-driven technique potentially very useful for adding high-framerate enhancements after recording.

Nvidia’s technique appears to be both faster and more accurate than existing slow-motion interpolation techniques, generating up to seven intermediate frames between each pair of original frames for a potential 8x slow-motion effect while keeping playback as fluid as the original footage.
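The arithmetic behind the "seven frames for 8x" figure can be sketched as follows, assuming k new frames are inserted between every pair of consecutive source frames and the result is played back at the source frame rate. The function names are hypothetical and only illustrate the counting.

```python
def interpolated_frame_count(n_frames: int, k: int) -> int:
    """Frames after inserting k new frames into each of the (n_frames - 1) gaps."""
    return n_frames + (n_frames - 1) * k


def effective_frame_rate(source_fps: float, k: int) -> float:
    """Capture rate the interpolated clip imitates: each gap is split into k + 1 steps."""
    return source_fps * (k + 1)


def slow_motion_factor(k: int) -> int:
    """How much slower motion appears when played back at the source frame rate."""
    return k + 1


# One second of 30FPS footage with seven intermediate frames per gap.
print(interpolated_frame_count(30, 7))  # 30 + 29 * 7
print(effective_frame_rate(30, 7))      # comparable to 240FPS capture
print(slow_motion_factor(7))            # roughly 8x slower at 30FPS playback
```

In this model, 30FPS footage with seven intermediate frames per gap behaves much like 240FPS capture, the same frame rate as the training videos mentioned above.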

You can join the discussion on Nvidia’s AI-driven slow-motion video conversion technology on the OC3D Forums.