Nvidia reveals Grace, their first ARM-based CPU for AI and High Performance Computing

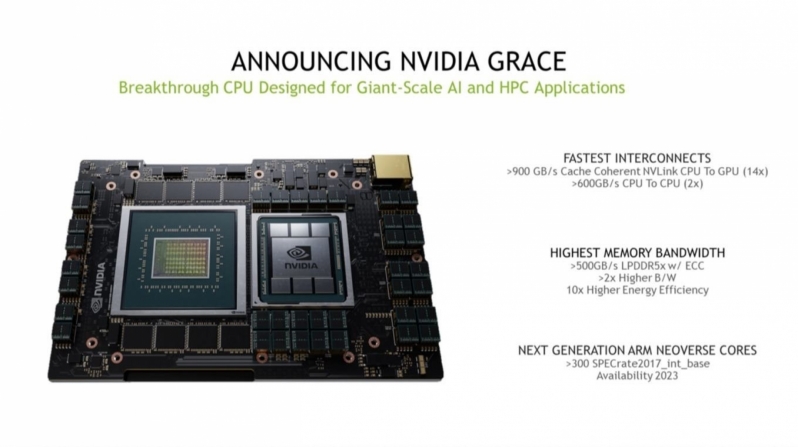

Today, at GTC 2021, Nvidia revealed their Grace processor, a custom ARM-based processor that will launch in 2023. Nvidia's not waiting for its ARM acquisition to be finalised; they are venturing into the CPU market right now. With a SPECrate2017_int_base score of over 300, Nvidia's ARM-based processor will be no slouch, and this is just the start of the company's CPU ambitions.

Nvidia's Grace processor is all about interconnect speeds. Nvidia believes that bandwidth is a critical aspect of future computing workloads, and it is an area where Intel and AMD are not keeping up with Nvidia's hardware requirements. Through NVLink, Nvidia's Grace processors will provide faster CPU-to-GPU and CPU-to-CPU bandwidth, enabling the acceleration of more workloads and allowing Nvidia's GPU clusters to accelerate ever-larger datasets.

Let's be clear, Nvidia's Grace processor is designed for Nvidia's DGX ecosystem, and that's where these processors will shine brightest.

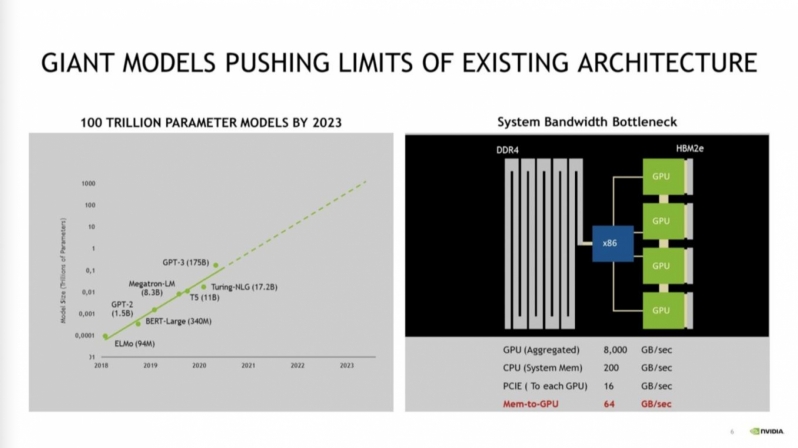

Nvidia's DGX systems need to address increasingly large datasets, which requires fast access to a large amount of memory. Sadly, Nvidia's current DGX systems are bottlenecked by x86 processors and relatively slow PCIe connections. NVLink is faster than PCIe, and while PCIe 5.0 and PCIe 6.0 are on the horizon, NVLink is available now.

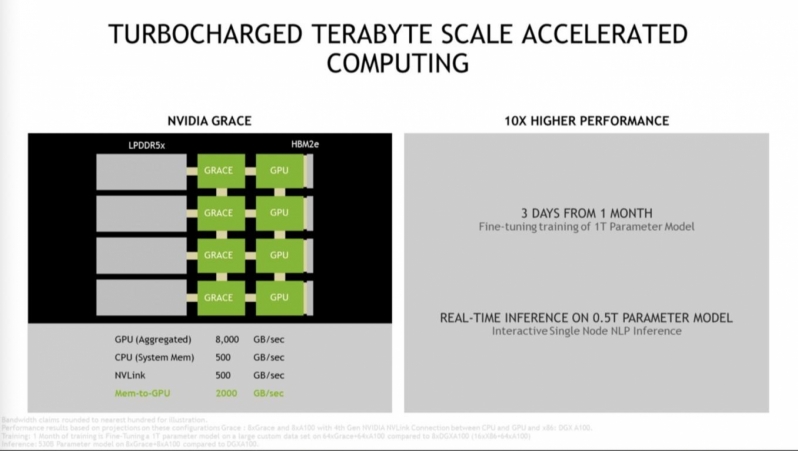

Nvidia wants to accelerate its workloads and allow their processors to utilise larger datasets, and that's where Nvidia's Grace processors come into play. Through Grace, Nvidia will utilise NVLink to enable faster communications between CPU memory and GPU compute clusters, offering more bandwidth to their graphics processors and increased throughput.

By creating CPUs and GPUs in tandem, Nvidia can accelerate their workloads to ludicrous degrees, which is good news for their customers.

Nvidia's Grace processors will utilise LPDDR5x memory and NVLink. When compared to their x86-based DGX systems, memory-to-GPU communications can be accelerated from 64GB/s to 2,000GB/s. That's a 31.25x increase in bandwidth, though it is worth noting that Nvidia's chart below uses four Grace processors while their x86 chart only uses one.
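
The 31.25x figure is simple division, and the four-versus-one caveat matters when reading it. The short Python sketch below reproduces the headline number and then normalises it per CPU. That second step rests on an assumption of ours, not Nvidia's: that the 2,000GB/s total is shared evenly across the four Grace processors. Nvidia's chart does not confirm how the bandwidth is split, so treat the per-CPU figure as a rough illustration only.

```python
# Memory-to-GPU bandwidth figures quoted in Nvidia's GTC 2021 comparison, in GB/s.
X86_MEMORY_TO_GPU_GBPS = 64.0      # one x86 CPU, per the article
GRACE_MEMORY_TO_GPU_GBPS = 2000.0  # four Grace CPUs combined, per the article
GRACE_CPU_COUNT = 4


def speedup(new_gbps: float, old_gbps: float) -> float:
    """Return how many times larger the new bandwidth is than the old one."""
    return new_gbps / old_gbps


# Headline comparison: four Grace CPUs against one x86 CPU.
headline = speedup(GRACE_MEMORY_TO_GPU_GBPS, X86_MEMORY_TO_GPU_GBPS)

# ASSUMPTION (not from Nvidia): the 2,000GB/s total divides evenly per Grace CPU.
per_grace_gbps = GRACE_MEMORY_TO_GPU_GBPS / GRACE_CPU_COUNT
per_cpu = speedup(per_grace_gbps, X86_MEMORY_TO_GPU_GBPS)

print(f"Headline: {headline:.2f}x")  # Headline: 31.25x
print(f"Per CPU:  {per_cpu:.2f}x")   # Per CPU:  7.81x
```

Even on this deliberately conservative per-processor reading, each Grace chip would still offer several times the memory-to-GPU bandwidth of the x86 setup in Nvidia's comparison.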

This level of improvement is possible because Nvidia designed their Grace processor specifically for their workloads, making it practically impossible for Intel or AMD to beat Nvidia in these use cases.

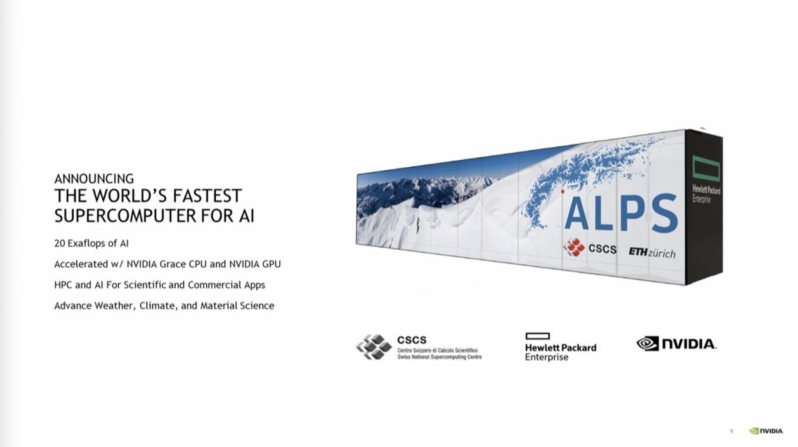

Nvidia has confirmed that the Swiss National Supercomputing Centre (CSCS) and Los Alamos National Laboratory are planning to use Nvidia's Grace processors within their next-generation supercomputers.

The Swiss centre's planned Alps supercomputer will come online in 2023 and offer 20 exaflops of AI compute performance. This supercomputer will use Nvidia's Grace CPUs and next-generation Nvidia GPUs.

You can join the discussion on Nvidia's Grace CPU on the OC3D Forums.