AMD launches ROCm 6.1, enabling multi-GPU support and other key upgrades

AMD gives developers more choice with ROCm 6.1

AMD has unveiled AMD ROCm 6.1.3, the latest release of its open software stack and the next step in its strategy to make ROCm broadly available across AMD's GPU portfolio, including Radeon desktop GPUs. The new release gives developers broader Radeon GPU support for running AI workloads with ROCm.

“The new AMD ROCm release extends functional parity from data center to desktops, enabling AI research and development on readily available and accessible platforms,” said Andrej Zdravkovic, senior vice president at AMD.

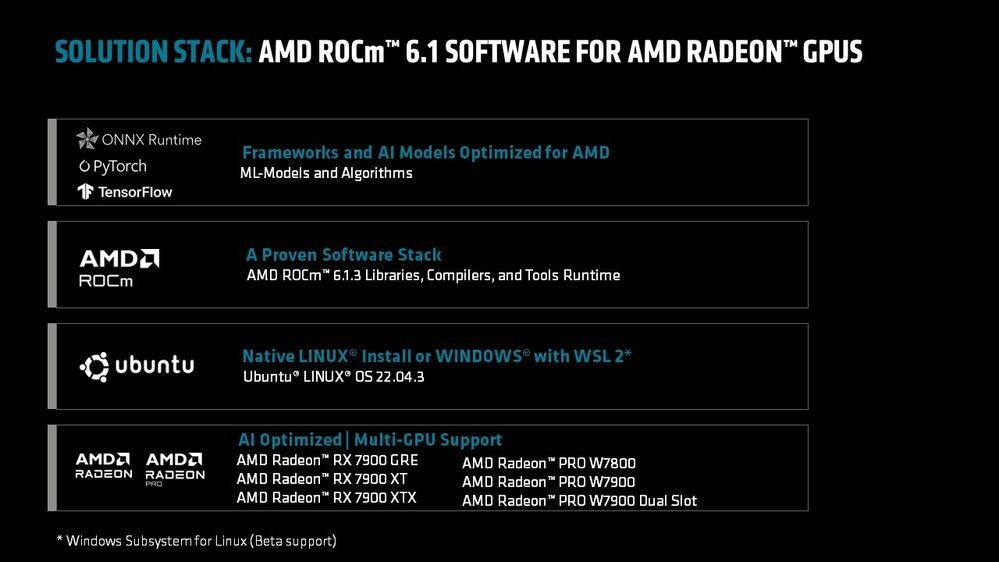

Key feature enhancements in this release focus on improving compatibility, accessibility, and scalability, and include:

- Multi-GPU support to enable building scalable AI desktops for multi-serving, multi-user solutions.

- Beta-level support for Windows Subsystem for Linux (WSL), allowing these solutions to run with ROCm on Windows-based systems.

- TensorFlow framework support, offering more choice for AI development.

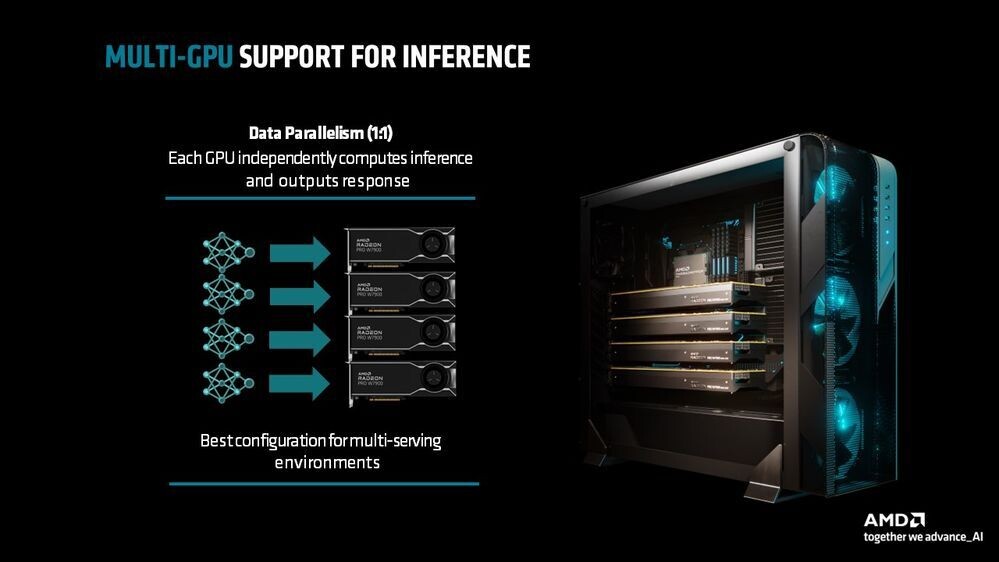

ROCm 6.1 now supports multi-GPU configurations

ROCm 6.1.3 now supports up to four qualified Radeon RX Series or Radeon PRO GPUs. This lets users build data-parallel configurations in which each GPU independently runs inference and returns its own response, enabling client-based multi-user setups powered by AMD ROCm software and Radeon GPUs.
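To make the data-parallel idea concrete, here is a minimal sketch of how a multi-user server might spread incoming requests across up to four GPUs, assuming each GPU holds its own full copy of the model. The function `assign_requests`, the round-robin policy, and the user IDs are hypothetical illustrations, not part of ROCm; the device strings follow the convention that ROCm builds of PyTorch expose AMD GPUs under the `cuda` device type. No inference is actually run here.

```python
from itertools import cycle

# Assumption: up to four qualified Radeon GPUs, per the ROCm 6.1.3 limit above.
MAX_GPUS = 4


def assign_requests(requests, num_gpus):
    """Hypothetical round-robin dispatcher: each request goes to exactly one GPU,
    and each GPU serves its share independently (one full model copy per GPU)."""
    if not 1 <= num_gpus <= MAX_GPUS:
        raise ValueError(f"num_gpus must be between 1 and {MAX_GPUS}")
    # ROCm builds of PyTorch address AMD GPUs as "cuda:<index>".
    devices = [f"cuda:{i}" for i in range(num_gpus)]
    plan = {device: [] for device in devices}
    # cycle() repeats the device list; zip() stops when the requests run out.
    for request, device in zip(requests, cycle(devices)):
        plan[device].append(request)
    return plan


plan = assign_requests(["user-a", "user-b", "user-c", "user-d", "user-e"], num_gpus=4)
for device, batch in plan.items():
    print(device, batch)
```

Round-robin is simply the easiest policy to show; a real deployment might instead route each request to the least-loaded GPU.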

With ROCm 6.1.3, AMD is making it even easier to develop for AI with beta support for Windows Subsystem for Linux (WSL 2). You can now run Linux-based AI tools on a Windows system without needing a dedicated Linux machine or a dual-boot setup.

AMD is also announcing qualification of the TensorFlow framework, which adds another option for AI development alongside the already supported PyTorch and ONNX and extends its open ecosystem of frameworks and libraries.

In addition, AMD is extending its client-based AI development offering with the AMD Radeon PRO W7900 Dual Slot card, which packs 192 AI accelerators and 48GB of fast GDDR6 memory into a compact form factor for higher system-level density.

AMD AI workstations equipped with a Radeon PRO W7900 GPU offer a new way to fine-tune and run inference on large language models (LLMs) with high precision. For example, LLaMA-2 or LLaMA-3 with 70B parameters, quantized to INT4, needs at least 35GB of local GPU memory for the weights alone, which makes the Radeon PRO W7900, with 48GB of fast on-board memory, a good fit for workflows built on these models.
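The 35GB figure follows from simple arithmetic: 70 billion parameters × 4 bits ÷ 8 bits per byte = 35 billion bytes. The sketch below repeats that calculation for a few precisions and compares the result with the W7900's 48GB. The helper `weight_memory_gb` is a hypothetical name, it uses decimal gigabytes (10^9 bytes), and it counts weights only; the KV cache, activations, and runtime overhead need additional memory, which is why the text says "at least".

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed for model weights only, in decimal GB (1 GB = 10**9 bytes).
    Hypothetical helper: ignores KV cache, activations, and framework overhead."""
    total_bytes = num_params * bits_per_param // 8
    return total_bytes / 1e9


W7900_MEMORY_GB = 48  # Radeon PRO W7900 on-board GDDR6

for label, bits in [("INT4", 4), ("INT8", 8), ("FP16", 16)]:
    need = weight_memory_gb(70_000_000_000, bits)
    print(f"70B @ {label}: {need:.0f} GB, fits in 48 GB: {need <= W7900_MEMORY_GB}")
# 70B @ INT4: 35 GB, fits in 48 GB: True
# 70B @ INT8: 70 GB, fits in 48 GB: False
# 70B @ FP16: 140 GB, fits in 48 GB: False
```

Only the INT4 case leaves headroom on a single 48GB card, roughly 13GB for everything other than the weights.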

Generative AI for natural language processing (NLP) using such LLMs can help enterprises tailor interaction with customers, assist with development operations (DevOps), and improve the process of managing data and documents.

AMD’s Radeon PRO W7900 Dual Slot GPU is now shipping

AMD is committed to building a highly scalable and open ecosystem with ROCm software. With the latest release of ROCm 6.1 software for Radeon desktop GPUs, AMD empowers system builders to take full advantage of its enhanced solution stack to create on-prem systems that add powerful AI performance to any IT infrastructure. This makes these tools well suited to mission-critical or low-latency projects, while allowing organizations to keep their sensitive data in-house.

With AMD ROCm 6.1 and the new AMD Radeon PRO W7900 Dual Slot card, which is now shipping, AI on the desktop opens new opportunities for developers and enterprises to increase productivity, creativity, and innovation, combining performance with ease of use.

You can join the discussion on AMD’s latest ROCm updates on the OC3D Forums.