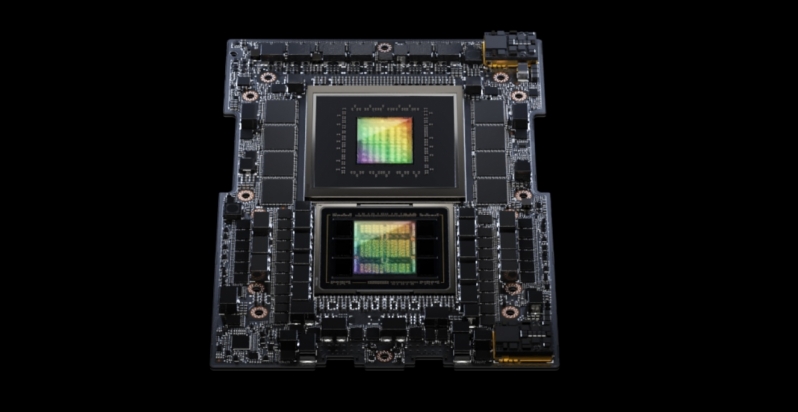

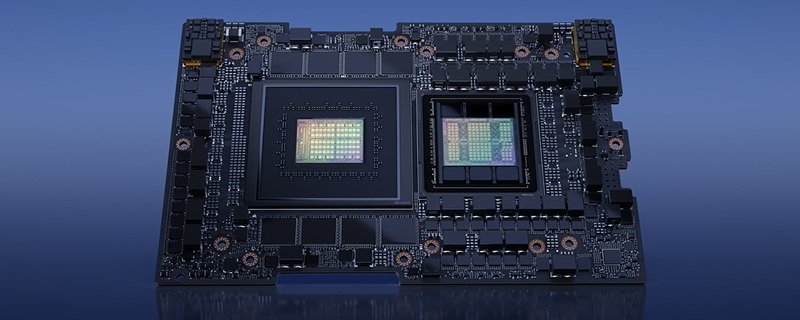

Nvidia’s next-generation GH200 Grace Hopper Superchip with an HBM3e memory boost

Nvidia launches the world’s first HBM3e product with their next-generation GH200 Grace Hopper Superchip platform

At SIGGRAPH, Nvidia has revealed its next-generation Grace Hopper GH200 Superchip platform, which is the first product to ever utilise HBM3e memory. Thanks to HBM3e, Nvidia’s new Superchip products can now feature increased memory bandwidth and higher memory capacities, allowing the company’s latest chips to both work faster and work with larger datasets.

With a dual configuration, Nvidia has stated that their new GH200 Superchip products can now feature up to 3.5x more memory capacity and 3x more memory bandwidth than before. This configuration is a single server that features 144 ARM Neoverse CPU cores, eight PetaFLOPS of AI performance, and 282GB of HBM3e memory.

Nvidia has stated that the HBM3e memory that it is using is 50% faster than the HBM3 memory that the company used previously, offering up to 10 TB/s of combined memory bandwidth per system. With a 50% increase in per-module memory bandwidth and a new 2x Grace Hopper server configuration, Nvidia is claiming to offer a 3x memory bandwidth boost with its new Grace Hopper Superchip configurations.
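The 3x figure follows directly from the two numbers quoted above, and a minimal Python sketch makes the arithmetic explicit. The function names are hypothetical and used only for illustration. The sketch also assumes that the 3x comparison is against a single HBM3-based chip, and that bandwidth and capacity are split evenly between the two Superchips, neither of which is spelled out in Nvidia's announcement.

```python
# Figures quoted in the article (Nvidia's claims, not measurements).
HBM3E_SPEEDUP = 1.5           # HBM3e is "50% faster" than HBM3
SUPERCHIPS_PER_SERVER = 2     # dual GH200 configuration
COMBINED_BANDWIDTH_TBS = 10   # "up to 10 TB/s" per system
TOTAL_HBM3E_GB = 282          # HBM3e capacity of the dual configuration


def bandwidth_uplift(speedup: float, chips: int) -> float:
    """Combined uplift over one HBM3-based chip (assumed baseline)."""
    return speedup * chips


def per_chip(total: float, chips: int) -> float:
    """Even split of a system-wide total across chips (an assumption)."""
    return total / chips


if __name__ == "__main__":
    uplift = bandwidth_uplift(HBM3E_SPEEDUP, SUPERCHIPS_PER_SERVER)
    bandwidth = per_chip(COMBINED_BANDWIDTH_TBS, SUPERCHIPS_PER_SERVER)
    capacity = per_chip(TOTAL_HBM3E_GB, SUPERCHIPS_PER_SERVER)
    print(f"Bandwidth uplift: {uplift}x")
    print(f"Per-Superchip bandwidth: up to {bandwidth} TB/s")
    print(f"Per-Superchip HBM3e capacity: {capacity} GB")
```

Under those assumptions, 1.5 x 2 gives the claimed 3x boost, and each Superchip accounts for up to 5 TB/s of bandwidth and 141GB of HBM3e.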

Nvidia has confirmed that its new HBM3e-powered GH200 systems will be available in Q2 2024 from leading system manufacturers, and that its new HBM3e-based Superchips will be fully compatible with the NVIDIA MGX server specification unveiled at COMPUTEX earlier this year.

If you want to boil Nvidia’s GH200 Superchip marketing down to its bare essentials, the company has revealed two things today. The first is that Nvidia is now using HBM3e memory chips with its Hopper AI chips, providing users with a 50% memory bandwidth boost and larger memory capacities. The second is that Nvidia can now provide two interconnected GH200 Grace Hopper Superchips within a single server, increasing the amount of memory and compute that it can offer within a single server.

Nvidia has not moved on from either its Grace CPU design or its Hopper AI accelerator design, but it has managed to make its products more appealing by increasing memory bandwidth and memory capacities with HBM3e, and it has increased the compute density of Grace Hopper Superchip servers with its new 2x server configuration options. Those are big innovations, even if Nvidia is using the same Grace and Hopper silicon as before.
