PCIe-attached memory delivers huge GPU boost with Panmnesia CXL tech

Will GPU makers adopt CXL for GPU memory expansion?

One of the biggest problems with modern AI/HPC GPUs is that they have a finite amount of memory. Yes, manufacturers like AMD, Intel, and Nvidia are placing as much HBM memory as they can into their GPUs and accelerators, but workloads are expanding and customers are demanding more. This is where Panmnesia steps in with their CXL memory expansion technology.

Using their optimised CXL IP, Panmnesia can add more memory to GPUs and AI accelerators. This memory is connected to GPUs/accelerators over the PCIe interface, adding off-GPU storage to their memory subsystems. This can increase the performance of GPUs when done correctly, but traditional solutions using Unified Virtual Memory (UVM) are slow and carry a large latency penalty.

Panmnesia, with their custom IP, have created a solution that allows for GPU storage expansion without the penalties of UVM. Furthermore, their optimised design achieves round-trip latencies in the double-digit nanosecond range. That’s a lot faster than the hundreds of nanoseconds seen with other prototype solutions.
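To see why round-trip latency matters so much, here is a rough back-of-envelope sketch in Python. It is a simplified model, not Panmnesia's methodology: it assumes every access to expansion memory pays the controller's round-trip time on top of a baseline access cost. The function name and all of the numbers (a 100 ns baseline, 50 ns for a double-digit controller, 250 ns for a prototype in the hundreds-of-nanoseconds range, and 25% of accesses going off-GPU) are illustrative assumptions, not measured figures.

```python
def average_access_ns(base_ns, expansion_round_trip_ns, expansion_fraction):
    """Average cost per memory access under a simple additive model.

    base_ns: assumed baseline cost of any access (hypothetical value).
    expansion_round_trip_ns: extra round trip paid by accesses that go
        to CXL expansion memory (hypothetical value).
    expansion_fraction: share of accesses that go off-GPU, from 0 to 1.
    """
    if not 0.0 <= expansion_fraction <= 1.0:
        raise ValueError("expansion_fraction must be between 0 and 1")
    return base_ns + expansion_fraction * expansion_round_trip_ns


# Illustrative placeholder numbers only; none of these come from Panmnesia.
BASE_NS = 100.0
DOUBLE_DIGIT_CONTROLLER_NS = 50.0   # stands in for a "double-digit ns" round trip
PROTOTYPE_CONTROLLER_NS = 250.0     # stands in for "hundreds of ns"
OFF_GPU_FRACTION = 0.25

fast = average_access_ns(BASE_NS, DOUBLE_DIGIT_CONTROLLER_NS, OFF_GPU_FRACTION)
slow = average_access_ns(BASE_NS, PROTOTYPE_CONTROLLER_NS, OFF_GPU_FRACTION)
print(f"double-digit controller: {fast} ns per access on average")
print(f"prototype controller:    {slow} ns per access on average")
```

Under these made-up numbers, the slower controller adds five times as much overhead per off-GPU access, and the gap widens as more of the working set spills into expansion memory. Real GPUs hide some latency through parallelism, so treat this as intuition rather than a performance prediction.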

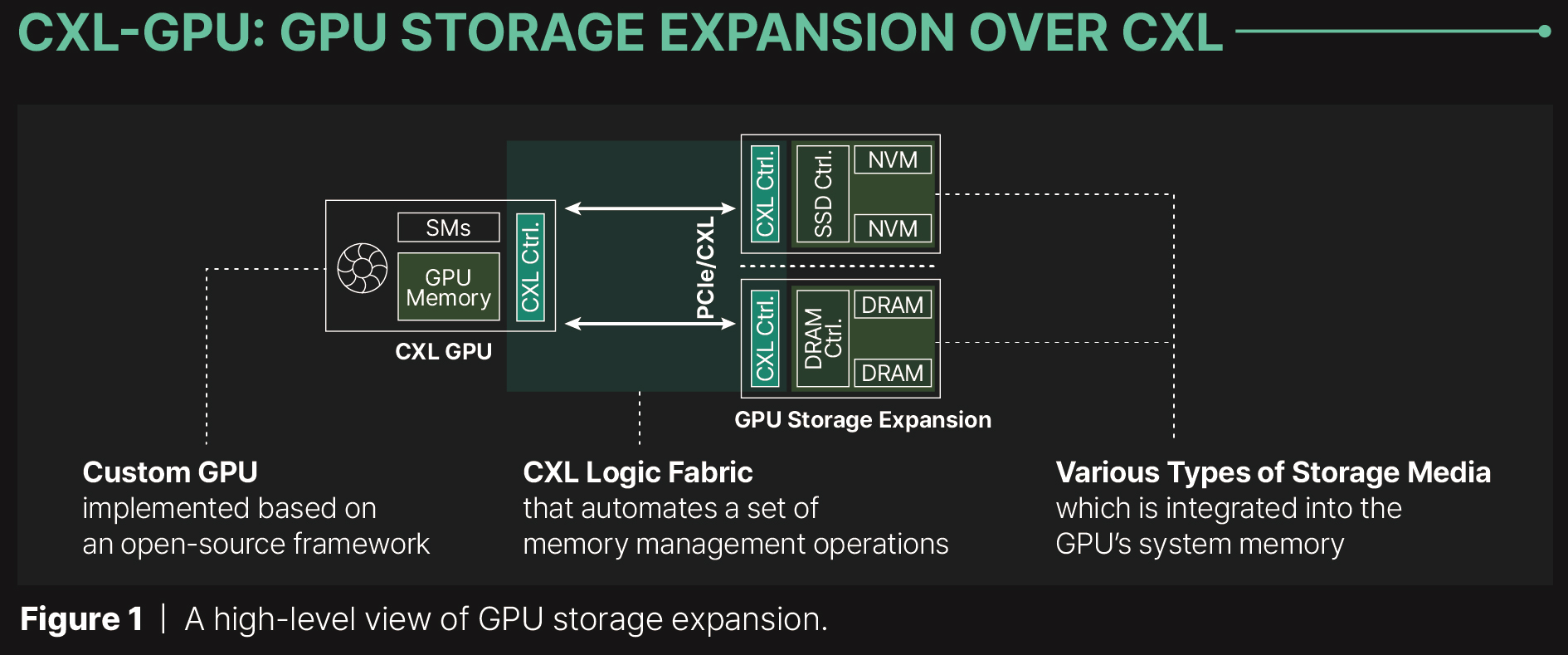

(Giving GPUs more memory with CXL)

Panmnesia delivers a huge performance boost with their CXL tech

Panmnesia’s solution (marked as CXL-Opt below) uses an on-device controller that allows custom GPUs to deal with CXL memory in an optimal way. The system connects to PCIe-connected DRAM or SSD storage using CXL. This can be used to add DDR5 memory or NAND storage to the memory pools of GPUs and accelerators.

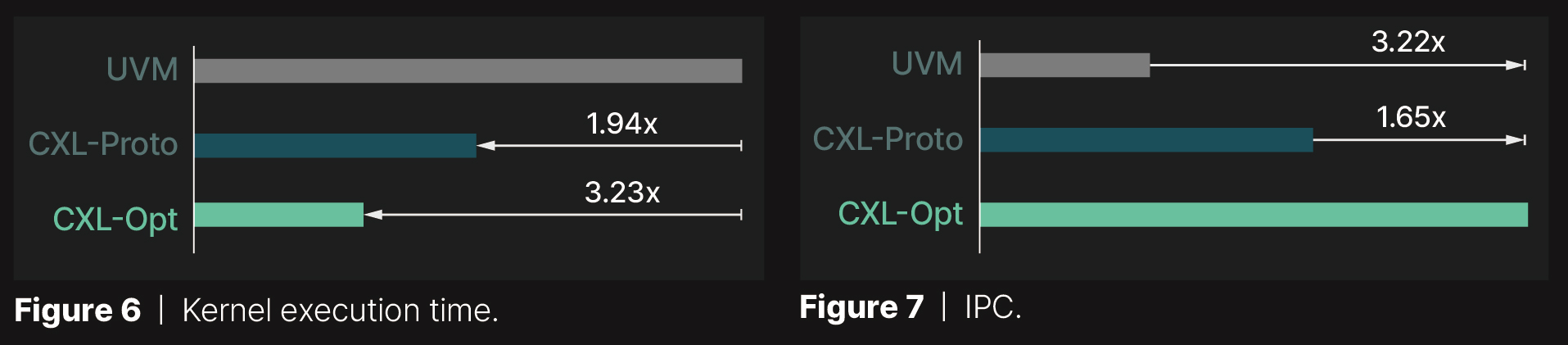

Below, we can see the benefits of Panmnesia’s CXL-Opt solution when compared to UVM. Note that CXL-Proto represents the performance of other prototype solutions, like those made by Meta or Samsung. As mentioned before, these prototypes have huge latency penalties that limit the potential performance gains that the added memory can deliver.

(The benefits of CXL memory with UVM Kernel execution)

Support for CXL memory expansion has the potential to dramatically increase the performance of existing GPUs and AI accelerators. That said, it remains to be seen if companies like AMD, Nvidia, or Intel will officially support it. Will they adopt Panmnesia’s solution, or create their own technology to address this problem?

Added on-card memory capacity is a huge selling point for any new HPC GPU or AI accelerator. With this in mind, official support for fast off-device memory may have its downsides for GPU manufacturers. Even so, it is clear that there is a demand for more memory on these devices, and someone needs to address that.
