Micron starts mass producing its HBM3e memory technology

Micron prepares to power the next generation of AI accelerators with their HBM3E memory

Micron has today announced that their HBM3E memory has entered volume production. With this new memory, Micron are promising faster speeds and greater power efficiency, with each HBM3E stack delivering more than 1.2 TB/s of bandwidth. That's more bandwidth from a single memory chip than Nvidia's RTX 4090 gets from its entire memory subsystem!
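To put that comparison in numbers: theoretical peak memory bandwidth is roughly the per-pin data rate multiplied by the bus width, divided by eight to convert bits to bytes. The sketch below applies that rule to the RTX 4090's listed memory configuration (a 384-bit GDDR6X bus at 21 Gb/s per pin) and sets it against the 1.2 TB/s figure Micron quotes for one HBM3E stack. It is a back-of-the-envelope calculation using decimal units (1 TB/s = 1000 GB/s) and ignores real-world efficiency losses.

```python
def peak_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate x number of pins / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


# RTX 4090: 384-bit GDDR6X memory bus running at 21 Gb/s per pin
rtx_4090_gbs = peak_bandwidth_gbs(21.0, 384)

# Micron's quoted figure for a single HBM3E stack ("more than 1.2 TB/s")
hbm3e_stack_gbs = 1200.0

print(f"RTX 4090 (whole card): {rtx_4090_gbs:.0f} GB/s")
print(f"One HBM3E stack: >{hbm3e_stack_gbs:.0f} GB/s")
print(f"Ratio: {hbm3e_stack_gbs / rtx_4090_gbs:.2f}x")
```

On these figures the RTX 4090 peaks at about 1008 GB/s, so a single HBM3E stack comes out roughly 19% ahead.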

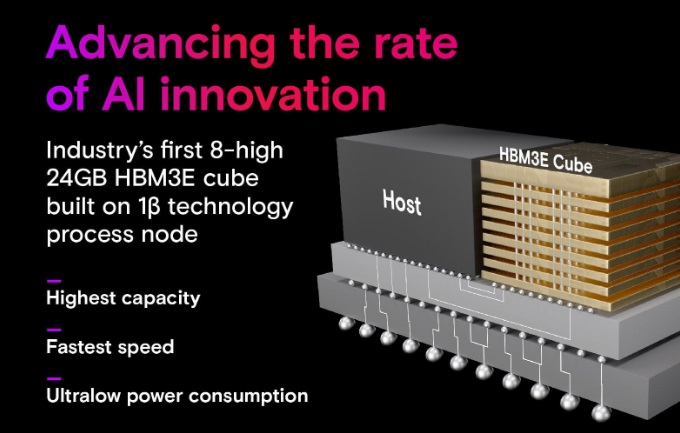

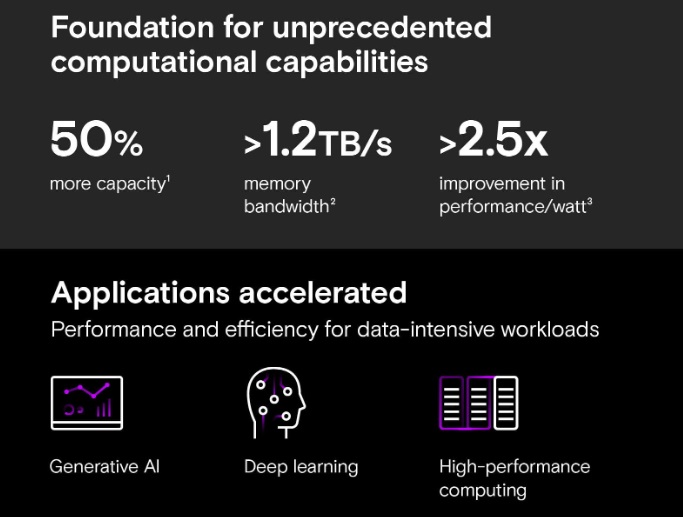

Today, Micron are producing 24GB HBM3E memory chips, and 36GB chips are now being sampled to customers. With HBM3E, manufacturers can achieve higher memory densities and higher levels of bandwidth than with today's HBM3 chips.
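Stack capacity is simply the number of stacked DRAM dies multiplied by the capacity of each die. Micron's press release below describes the 24GB part as "8H" (eight dies high), which works out to 3 GB (24 Gb) per die. The sketch below derives that figure and, assuming the 36GB part keeps the same per-die density (an assumption, not something this announcement states), how many dies it would need.

```python
def per_die_gb(stack_gb: float, dies: int) -> float:
    """Capacity of each DRAM die in a stack, assuming all dies are identical."""
    return stack_gb / dies


def dies_needed(stack_gb: float, die_gb: float) -> int:
    """Number of identical dies required to reach a given stack capacity."""
    return round(stack_gb / die_gb)


# 24GB 8-high stack, as named in Micron's press release
die_gb = per_die_gb(24, 8)
print(f"Per-die capacity: {die_gb:g} GB ({die_gb * 8:g} Gb)")

# Assumption: the 36GB sample uses dies of the same density
print(f"Dies for a 36GB stack at that density: {dies_needed(36, die_gb)}")
```

Under that assumption, the 36GB stack would be 12 dies high, which is why capacity gains at this stage come from taller stacks as much as from denser dies.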

AI workloads are incredibly bandwidth-reliant, which makes the bandwidth offered by HBM3E critical for the next generation of AI accelerators. AMD has teased upgraded HBM3E versions of their Instinct MI300 AI accelerators, and Nvidia has also revealed plans to use HBM3E memory in their future products. Micron wants to be a key memory provider for these designs.

With memory performance being critical for AI workloads, companies like Micron have a lot to gain from the AI market. Producing the fastest memory in high volumes will allow Micron to benefit from the surge in demand for AI products. As such, we can expect Micron to push themselves to create faster HBM3/HBM3E modules. We can also expect Micron to develop future memory types (HBM4?) on an accelerated timeframe.

Press Release – Micron Commences Volume Production of Industry-Leading HBM3E Solution to Accelerate the Growth of AI

Micron Technology, a global leader in memory and storage solutions, today announced it has begun volume production of its HBM3E (High Bandwidth Memory 3E) solution. Micron’s 24GB 8H HBM3E will be part of NVIDIA H200 Tensor Core GPUs, which will begin shipping in the second calendar quarter of 2024. This milestone positions Micron at the forefront of the industry, empowering artificial intelligence (AI) solutions with HBM3E’s industry-leading performance and energy efficiency.

HBM3E: Fueling the AI Revolution

As the demand for AI continues to surge, the need for memory solutions to keep pace with expanded workloads is critical. Micron’s HBM3E solution addresses this challenge head-on with:

- Superior Performance: With pin speed greater than 9.2 gigabits per second (Gb/s), Micron’s HBM3E delivers more than 1.2 terabytes per second (TB/s) of memory bandwidth, enabling lightning-fast data access for AI accelerators, supercomputers, and data centers.

- Exceptional Efficiency: Micron’s HBM3E leads the industry with ~30% lower power consumption compared to competitive offerings. To support increasing demand and usage of AI, HBM3E offers maximum throughput with the lowest levels of power consumption to improve important data center operational expense metrics.

- Seamless Scalability: With 24 GB of capacity today, Micron’s HBM3E allows data centers to seamlessly scale their AI applications. Whether for training massive neural networks or accelerating inferencing tasks, Micron’s solution provides the necessary memory bandwidth.
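The pin speed and bandwidth figures above are linked by the width of the memory interface. Every HBM stack uses a 1024-bit interface, so per-stack bandwidth is the per-pin rate times 1024, divided by eight. The sketch below is a rough check under that assumption, using decimal units (1 TB/s = 1000 GB/s); the helper names are illustrative only.

```python
HBM_INTERFACE_BITS = 1024  # data pins per HBM stack


def stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s for a given per-pin data rate in Gb/s."""
    return pin_rate_gbps * width_bits / 8


def pin_rate_for(target_gbs: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Per-pin data rate in Gb/s needed to reach a target bandwidth in GB/s."""
    return target_gbs * 8 / width_bits


print(f"9.2 Gb/s per pin -> {stack_bandwidth_gbs(9.2):.1f} GB/s per stack")
print(f"Pin rate needed for 1.2 TB/s: {pin_rate_for(1200.0):.3f} Gb/s")
```

By this arithmetic, exactly 9.2 Gb/s gives about 1.18 TB/s, so the "more than 1.2 TB/s" figure implies pin speeds of roughly 9.4 Gb/s or higher, consistent with the "greater than 9.2 Gb/s" wording.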

“Micron is delivering a trifecta with this HBM3E milestone: time-to-market leadership, best-in-class industry performance, and a differentiated power efficiency profile,” said Sumit Sadana, executive vice president and chief business officer at Micron Technology. “AI workloads are heavily reliant on memory bandwidth and capacity, and Micron is very well-positioned to support the significant AI growth ahead through our industry-leading HBM3E and HBM4 roadmap, as well as our full portfolio of DRAM and NAND solutions for AI applications.”

Micron developed this industry-leading HBM3E design using its 1-beta technology, advanced through-silicon via (TSV), and other innovations that enable a differentiated packaging solution. Micron, a proven leader in memory for 2.5D/3D-stacking and advanced packaging technologies, is proud to be a partner in TSMC’s 3DFabric Alliance and to help shape the future of semiconductor and system innovations.

You can join the discussion on Micron’s HBM3e memory entering volume production on the OC3D Forums.