AMD teases HBM3E upgrade plans for their Instinct MI300 accelerator lineup

AMD is preparing to give their strongest AI chips an HBM3E memory upgrade

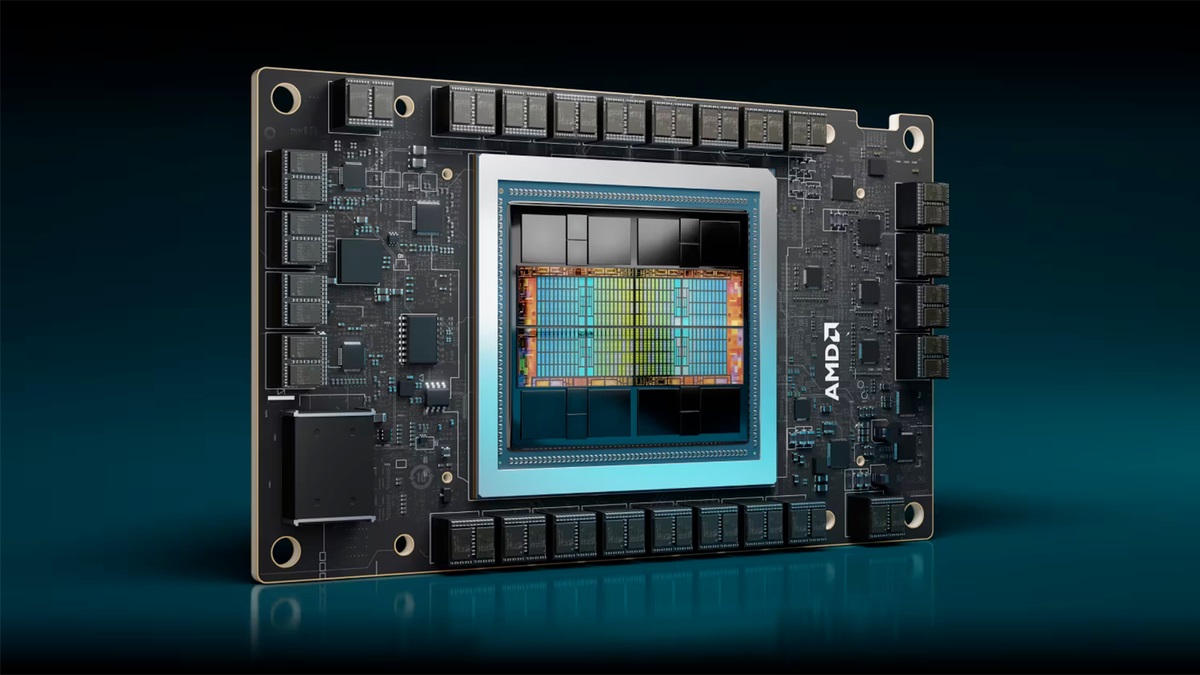

One of the most important aspects of an AI accelerator is its memory configuration. Memory bandwidth and capacity are vital for AI workloads, and it looks like AMD is preparing to give their Instinct MI300 accelerators an HBM3E upgrade.

Speaking with Brett Simpson at an Arete Investor Webinar (as transcribed by Seeking Alpha), AMD’s CTO, Mark Papermaster, discussed AMD’s plans for the AI market. There, Papermaster teased new memory configurations for AMD’s Instinct MI300 series of AI accelerators. Based on his statements, it looks like new HBM3E-based MI300 models are planned.

Nvidia has already revealed their plans to launch new HBM3E-based AI accelerators, and now it looks like AMD has similar plans. With HBM3E, AMD can give their Instinct MI300 series accelerators a significant performance boost. As mentioned above, memory performance is vital for AI workloads, and HBM3E memory modules can be 25-50% faster than HBM3 modules.

> And we worked extremely closely with all three memory vendors. So that is why we led with MI300, and we decided to invest more in the HBM complex. So we have a higher bandwidth.
>
> And that is fundamental along with the CDNA, which is our name of our IP, that’s our GPU computation IP for AI, along with that, it was HBM know-how that allowed us to establish our leadership position in AI inferencing.
>
> And with that, we architected for the future. So we have 8-high stacks. We architected for 12-high stacks. We are shipping with MI300 HBM3. We’ve architected for HBM3E. So we understand memory. We have the relationship and we have the architectural know-how to really stay on top of the capabilities needed. And because of that deep history that we have not only with the memory vendors, but also with TSMC and the rest of the substrate supplier and OSAT community we’ve been focused as well on delivery and supply chain.
>
> – Mark Papermaster – AMD CTO

AMD’s Instinct MI300X features 192GB of HBM3 memory, which it gets from eight 24GB HBM3 stacks. Micron recently confirmed that they are sampling 36GB HBM3E modules. If AMD swapped each of the eight 24GB stacks for one of these 36GB modules, they could create a 288GB HBM3E-based Instinct MI300 accelerator.
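The capacity figures above are simple multiplication, and a short sketch makes the comparison explicit. The function and variable names here are illustrative only, and the snippet assumes a hypothetical HBM3E model would keep the MI300X's eight-stack layout, which AMD has not confirmed.

```python
def total_capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Total on-package memory for a given stack count and per-stack size."""
    return stacks * gb_per_stack


# Assumption: a future HBM3E model keeps the MI300X's eight memory stacks.
STACKS = 8

hbm3_capacity = total_capacity_gb(STACKS, 24)   # current MI300X, 24GB HBM3 stacks
hbm3e_capacity = total_capacity_gb(STACKS, 36)  # hypothetical, 36GB HBM3E stacks

# Relative capacity gain from moving to the larger stacks.
uplift = (hbm3e_capacity - hbm3_capacity) / hbm3_capacity

print(f"HBM3:  {hbm3_capacity}GB")
print(f"HBM3E: {hbm3e_capacity}GB ({uplift:.0%} more capacity)")
```

Under that assumption, the move from 24GB to 36GB stacks would raise total capacity by half, from 192GB to 288GB.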

AI accelerators have become a major earner for chipmakers. With Nvidia chips in short supply, AMD is poised to generate a lot of money from their Instinct AI accelerators as companies search for an alternative hardware provider. With HBM3E-upgraded accelerators, AMD could become more competitive than ever within the market.
