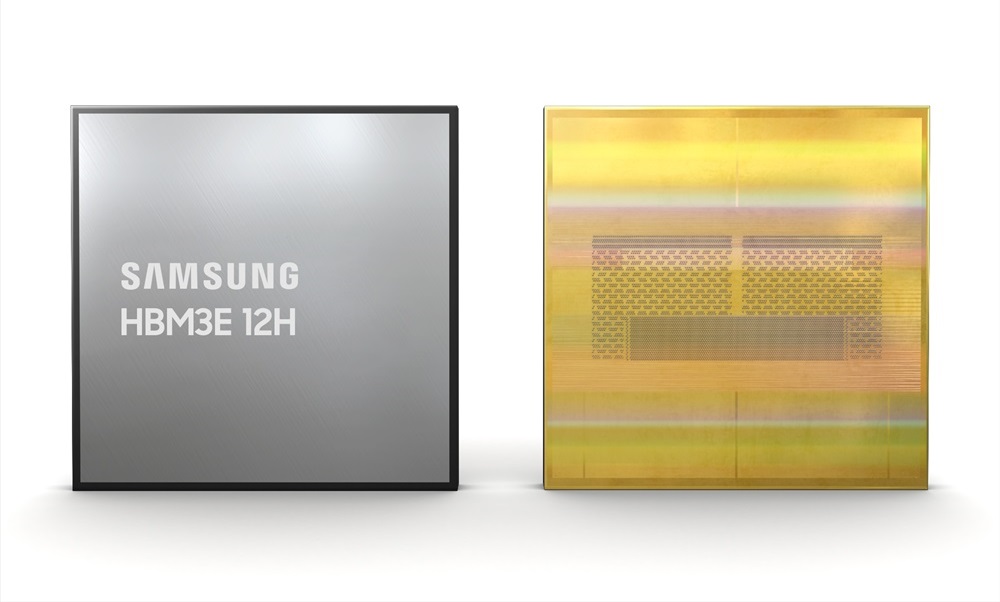

Samsung reveals its first 36GB 12-layer HBM3E memory modules

Samsung delivers 50% speed and capacity boosts with its newest HBM3E modules

Samsung has created its fastest and densest HBM modules to date. Today, Samsung revealed its 36GB 12-layer HBM3E memory modules, promising a 50% boost in both performance and capacity over the company’s 24GB HBM3 modules.

With demand for AI hardware exploding, memory manufacturers are racing to produce the fastest and densest HBM modules. AI workloads are highly sensitive to memory bandwidth, so memory speed has a large impact on the performance of AI accelerators. Nvidia has already confirmed its plans to use HBM3E memory modules, and AMD has teased a similar move.

While Samsung’s announcement states that it has created “the industry’s first 12-stack HBM3E DRAM and the highest-capacity HBM product to date”, this isn’t entirely true: Micron revealed its own 12-layer 36GB HBM3E modules yesterday. That said, both manufacturers are currently only sampling their 36GB modules to customers.

Samsung plans to begin mass production of its 12-layer HBM3E memory modules in the first half of 2024.

Based on Samsung’s simulations, its new HBM3E memory modules can increase the average speed of AI training by 34% compared to today’s HBM3 modules. AMD’s Instinct MI300X features 192GB of HBM3 memory, spread across eight 24GB stacks. By swapping those stacks for Samsung’s 36GB HBM3E modules, AMD could release a new MI300 series accelerator with 288GB of faster HBM3E memory. This change would boost the performance of AMD’s AI accelerator and allow users to work with larger datasets.
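As a rough check of the 288GB figure, the sketch below multiplies out per-stack capacity. It assumes that the MI300X’s 192GB comes from eight 24GB HBM3 stacks and that a hypothetical HBM3E refresh keeps the same eight stack sites, simply swapping each one for a 36GB module. The constant and function names are illustrative only.

```python
# Assumptions for illustration: an MI300X-class package carries eight HBM
# stacks, and a hypothetical HBM3E refresh keeps that same stack count.
HBM3_STACK_GB = 24      # Samsung's 24GB HBM3 module
HBM3E_STACK_GB = 36     # Samsung's 36GB 12-layer HBM3E module
STACKS_PER_PACKAGE = 8  # assumed number of stacks per accelerator package


def total_capacity_gb(stack_gb: int, stacks: int = STACKS_PER_PACKAGE) -> int:
    """Total on-package memory for a given per-stack capacity."""
    return stack_gb * stacks


def capacity_gain_pct(old_gb: int, new_gb: int) -> float:
    """Percentage increase when moving from old_gb to new_gb."""
    return (new_gb - old_gb) / old_gb * 100


current = total_capacity_gb(HBM3_STACK_GB)    # eight 24GB HBM3 stacks
upgraded = total_capacity_gb(HBM3E_STACK_GB)  # eight 36GB HBM3E stacks
print(f"HBM3:  {current}GB")
print(f"HBM3E: {upgraded}GB (+{capacity_gain_pct(current, upgraded):.0f}%)")
```

Because the stack count is held constant, the package-level gain is the same 50% as the per-module gain (24GB to 36GB); a real product could of course use a different number of stacks.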

You can join the discussion on Samsung’s 36GB HBM3E modules on the OC3D Forums.