Nvidia RTX 2080 and RTX 2080 Ti Preview

GDDR6

Long before Turing was announced, we knew that Nvidia’s new graphics cards would use GDDR6 memory. Today’s gaming workloads are becoming increasingly bandwidth-starved, especially as gamers reach for higher resolutions and faster refresh rates, creating the need for additional memory bandwidth.

In recent hardware generations, manufacturers have turned to HBM and GDDR5X to supply the memory bandwidth that modern games require, but both solutions have drawbacks. HBM (and HBM2) is difficult to implement, as it places stacks of memory alongside the GPU die on a silicon interposer or other complex packaging, which makes HBM-powered graphics cards difficult to produce. GDDR5X, on the other hand, works much like GDDR5, but the bandwidth boost it offers is small compared to GDDR6: most GDDR5X graphics cards use 10Gbps memory, a relatively small increase over the 8Gbps GDDR5 that is common on other products.

With Turing, Nvidia has moved to GDDR6, which offers higher power efficiency than GDDR5 while also providing a substantial performance uplift. The 14Gbps memory used on Nvidia’s Turing RTX series graphics cards offers a 40% uplift in per-pin data rate over the 10Gbps GDDR5X used on the GTX 1080, and a 75% improvement over the 8Gbps GDDR5 memory used on the GTX 1070.
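The percentages above follow directly from the per-pin data rates. The short Python sketch below reproduces them; the 256-bit bus width used for the total-bandwidth line is an assumption taken from the RTX 2080’s and GTX 1080’s published specifications rather than from this article.

```python
# Per-pin data rates (Gbps) quoted in the article.
GDDR5_GTX1070 = 8.0
GDDR5X_GTX1080 = 10.0
GDDR6_TURING = 14.0


def uplift_percent(new_rate: float, old_rate: float) -> float:
    """Percentage increase of new_rate over old_rate."""
    return (new_rate / old_rate - 1.0) * 100.0


def peak_bandwidth_gbs(rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin data rate times bus width, over 8 bits per byte."""
    return rate_gbps * bus_width_bits / 8.0


if __name__ == "__main__":
    print(f"GDDR6 vs GDDR5X: +{uplift_percent(GDDR6_TURING, GDDR5X_GTX1080):.0f}%")
    print(f"GDDR6 vs GDDR5:  +{uplift_percent(GDDR6_TURING, GDDR5_GTX1070):.0f}%")
    # Assumption: a 256-bit bus, as on the RTX 2080 and GTX 1080 (not stated in the article).
    print(f"256-bit bus @ 14Gbps: {peak_bandwidth_gbs(GDDR6_TURING, 256):.0f} GB/s")
```

The same bus width at 10Gbps gives 320 GB/s, so at equal bus width the 40% data-rate uplift carries straight through to peak bandwidth.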

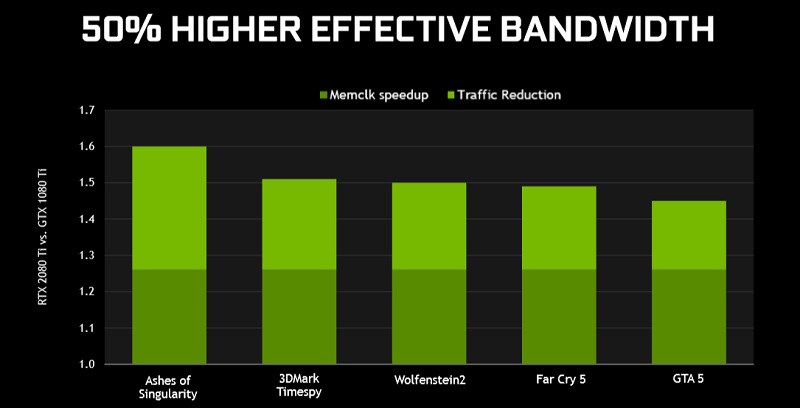

On top of Turing’s use of GDDR6 memory, Nvidia has also brought improved bandwidth-saving measures to its Turing graphics architecture, allowing the RTX 2080 Ti to offer a 50% boost in effective memory bandwidth over its predecessor, the GTX 1080 Ti.
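Part of that 50% comes from GDDR6 itself and part from the bandwidth-saving measures. As a rough sketch, assuming the figure refers to effective bandwidth and using the 352-bit bus, 14Gbps GDDR6 (RTX 2080 Ti) and 11Gbps GDDR5X (GTX 1080 Ti) from the cards’ published specifications (none of which are stated in this article), the arithmetic below estimates how much those measures would need to contribute. The resulting factor is a back-of-envelope derivation, not an Nvidia figure.

```python
def peak_bandwidth_gbs(rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin data rate times bus width, over 8 bits per byte."""
    return rate_gbps * bus_width_bits / 8.0


# Assumed published specifications (not stated in the article).
raw_2080ti = peak_bandwidth_gbs(14.0, 352)  # 14Gbps GDDR6, 352-bit bus
raw_1080ti = peak_bandwidth_gbs(11.0, 352)  # 11Gbps GDDR5X, 352-bit bus

raw_gain = raw_2080ti / raw_1080ti          # gain from the faster memory alone
claimed_effective_gain = 1.5                # the 50% figure, read as effective bandwidth
implied_compression_gain = claimed_effective_gain / raw_gain

if __name__ == "__main__":
    print(f"Raw: {raw_2080ti:.0f} vs {raw_1080ti:.0f} GB/s ({raw_gain:.2f}x)")
    print(f"Implied gain from bandwidth-saving measures: {implied_compression_gain:.2f}x")
```

Under these assumptions, GDDR6 alone accounts for roughly a 27% raw increase, leaving around 18% to come from the architecture’s bandwidth-saving measures.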

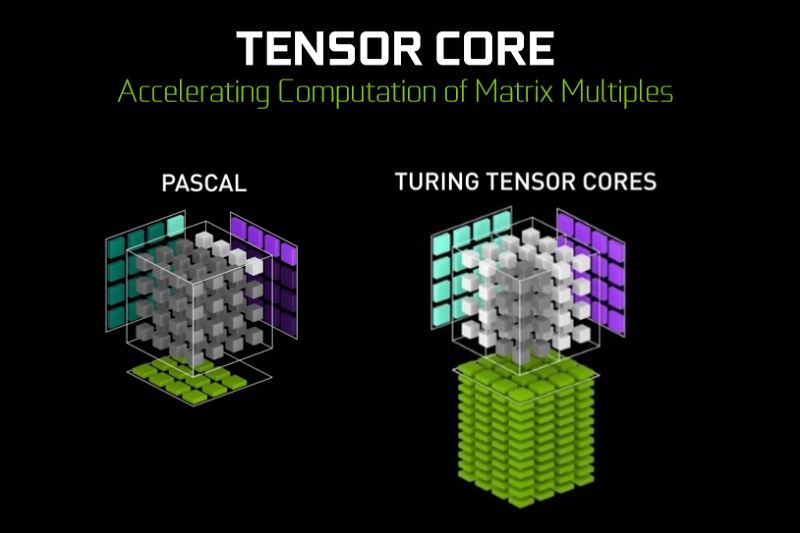

Nvidia’s Tensor cores are designed to accelerate matrix multiplication, maths that is commonly used by deep learning algorithms and other AI-focused compute scenarios.
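The core operation is a fused matrix multiply-accumulate, D = A × B + C, performed on small matrix tiles. The plain-Python sketch below shows that operation on a 4×4 tile; the tile size and the `mma` name are illustrative choices, and the code runs in double precision on the CPU, so it says nothing about how Tensor cores schedule or store the work.

```python
from typing import List

Matrix = List[List[float]]


def mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Fused multiply-accumulate: return D = A x B + C for n x n matrices."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]


if __name__ == "__main__":
    n = 4  # illustrative tile size
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [[float(i * n + j) for j in range(n)] for i in range(n)]
    ones = [[1.0] * n for _ in range(n)]
    d = mma(identity, b, ones)  # I x B + 1 adds one to every element of B
    for row in d:
        print(row)
```

Deep learning layers are built from huge numbers of these small multiply-accumulate steps, which is why dedicated hardware for them pays off.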

Some of you will be wondering why Nvidia has decided to bring this enterprise-grade feature into the gaming space, but the answer is simple. What can you do with AI and Deep Learning? Almost anything!

Right now, Nvidia uses its Tensor cores for Deep Learning Super Sampling (DLSS) in games, which allows Nvidia to offer image quality similar to a native-resolution presentation with TAA while delivering a significant performance uplift. This uplift is estimated to be in the region of 35-40%, acting as a kind of “free performance upgrade” for games that support the Deep Learning algorithm.
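DLSS gets its speed by rendering fewer pixels and using the network to reconstruct the output image. The sketch below compares pixel counts, assuming, hypothetically, a 2560×1440 internal resolution for a 3840×2160 output; the article does not state the internal resolution DLSS uses.

```python
from typing import Tuple

Resolution = Tuple[int, int]


def pixel_ratio(internal: Resolution, output: Resolution) -> float:
    """Fraction of the output's pixels that are actually rendered at the internal resolution."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


if __name__ == "__main__":
    # Hypothetical: a 2560x1440 internal render reconstructed to 3840x2160.
    r = pixel_ratio((2560, 1440), (3840, 2160))
    print(f"Rendered pixels: {r:.1%} of output ({1 / r:.2f}x fewer)")
```

Rendering 4/9 of the pixels is a much larger reduction than the 35-40% uplift quoted above, presumably because not every part of a frame scales with resolution and the reconstruction step itself takes time.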

Nvidia has stated that they plan to create other technologies that can utilise their Tensor cores.

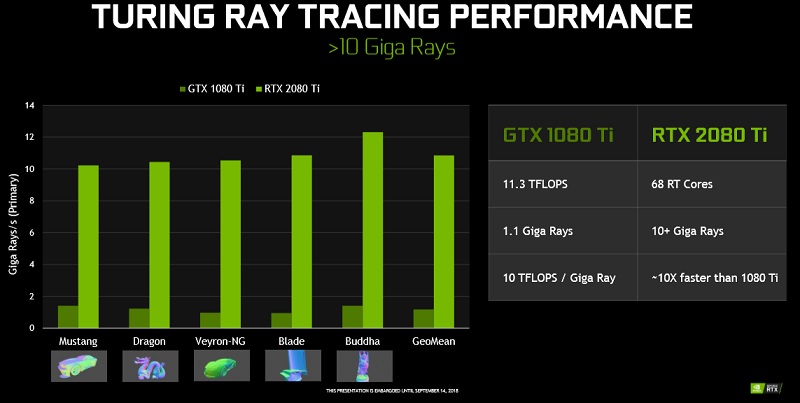

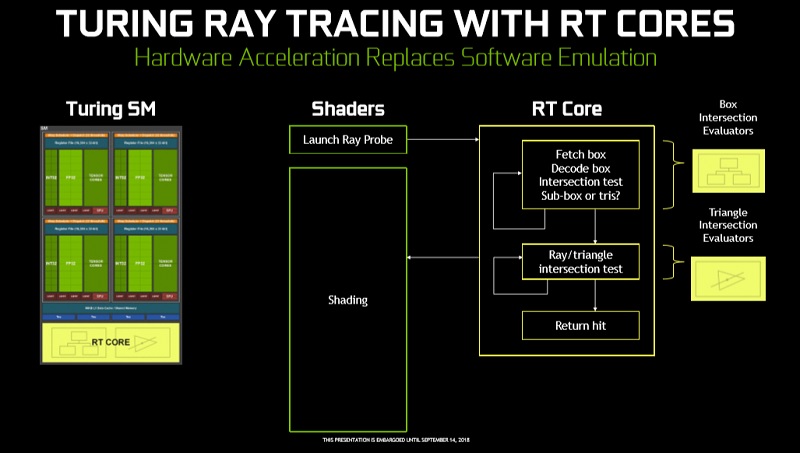

Nvidia’s RT (Ray Tracing) cores are perhaps the most heavily advertised part of the Turing architecture, acting as the industry’s first form of hardware acceleration for Ray Tracing, at least in the GPU market.

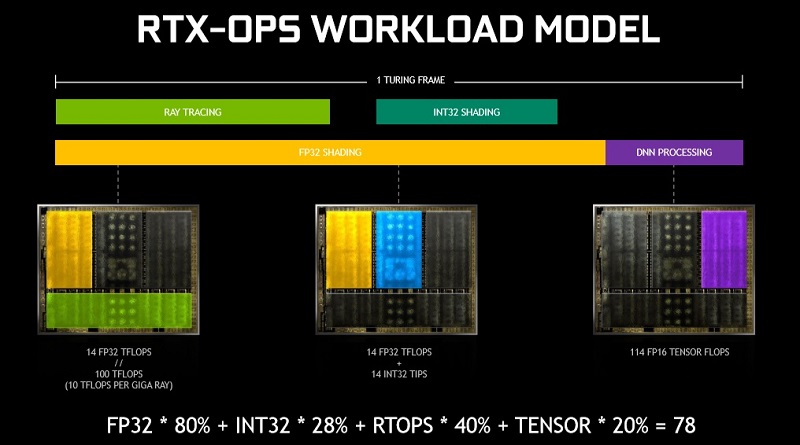

In the slide below, imagine that the shading and RT Core workloads were placed in a single line and executed one after another, creating a long queue of work for any graphics processor. The benefit of Nvidia’s RT cores is twofold. First, they add parallelism to Turing’s workflow, allowing shading calculations to run concurrently with Ray Tracing, and second, they accelerate the Ray Tracing workload directly, completing it faster.
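Both effects can be expressed in a simple frame-time model. The sketch below is an idealised assumption, not Nvidia’s actual scheduling: with `concurrent=False` the shading and Ray Tracing work run back to back, with `concurrent=True` they overlap perfectly, and the hypothetical `rt_speedup` parameter stands in for RT core acceleration. The millisecond values are made up for illustration.

```python
def frame_time_ms(shading_ms: float, rt_ms: float,
                  rt_speedup: float = 1.0, concurrent: bool = False) -> float:
    """Idealised frame time for shading plus Ray Tracing work.

    rt_speedup divides the Ray Tracing cost (a stand-in for RT core acceleration);
    concurrent=True assumes the two workloads overlap perfectly.
    """
    rt = rt_ms / rt_speedup
    return max(shading_ms, rt) if concurrent else shading_ms + rt


if __name__ == "__main__":
    # Hypothetical costs: 10 ms of shading, 20 ms of Ray Tracing on shader cores.
    print(frame_time_ms(10.0, 20.0))                         # 30.0: one long queue
    print(frame_time_ms(10.0, 20.0, rt_speedup=10.0))        # 12.0: faster RT, still serial
    print(frame_time_ms(10.0, 20.0, 10.0, concurrent=True))  # 10.0: faster RT, overlapped
```

Real workloads never overlap perfectly, since shading often depends on the results of the rays it launches, so the concurrent figure should be read as a best case.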

When compared to Nvidia’s GTX 1080 Ti, the RTX 2080 Ti is said to be roughly 10 times faster in Ray Tracing workloads, capable of up to 10 Giga Rays per second.
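To put 10 Giga Rays per second in perspective, the sketch below divides that peak rate across every pixel of every frame. The 60fps target and the two resolutions are assumptions chosen for illustration, and a quoted peak rate should not be expected in real scenes.

```python
def rays_per_pixel(rays_per_second: float, fps: float, width: int, height: int) -> float:
    """Peak rays available per pixel per frame if the whole budget went to that frame."""
    return rays_per_second / (fps * width * height)


if __name__ == "__main__":
    GIGA_RAYS = 10e9  # the RTX 2080 Ti's quoted peak rate
    for w, h in ((1920, 1080), (3840, 2160)):
        print(f"{w}x{h} @ 60fps: {rays_per_pixel(GIGA_RAYS, 60, w, h):.1f} rays/pixel/frame")
```

That works out to roughly 80 rays per pixel per frame at 1080p and about 20 at 4K, a budget that must cover shadows, reflections and any additional bounces, and one far below what offline renderers typically use.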

This kind of performance is transformative for the world of Real-Time Ray Tracing, making the task feasible within the gaming market for the first time.

As transformative as Nvidia’s RT cores are, they do not offer the performance required to fully Ray Trace games, but they do enable a form of hybrid rendering. This allows developers to combine traditional rasterisation with Ray Tracing to deliver higher levels of graphical fidelity than ever before, while still rendering in real time on Turing hardware.
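Hybrid rendering can be illustrated with a toy two-stage pass. In the Python sketch below, which is a hypothetical illustration rather than how Turing or any real engine works, a stand-in “raster” stage supplies the world-space position of a ground plane for each pixel, and a ray-traced stage then fires one shadow ray per pixel towards a point light, testing it against a single sphere. Real engines take surface positions from a rasterised G-buffer and launch rays through APIs such as DirectX Raytracing.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shadow_ray_occluded(point: Vec3, light: Vec3, center: Vec3, radius: float) -> bool:
    """True if the segment from point to light passes through the sphere."""
    d = sub(light, point)  # unnormalised, so t = 1.0 lands exactly on the light
    oc = sub(point, center)
    a = dot(d, d)
    b = 2.0 * dot(oc, d)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the ray misses the sphere entirely
    sq = math.sqrt(disc)
    # Occluded only if an intersection lies strictly between the point and the light.
    return any(1e-6 < t < 1.0 for t in ((-b - sq) / (2.0 * a), (-b + sq) / (2.0 * a)))


def render_hybrid(width: int, height: int, light: Vec3,
                  center: Vec3, radius: float) -> List[List[float]]:
    """Toy hybrid pass returning brightness values (1.0 lit, 0.2 shadowed)."""
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            # Stand-in raster stage: pixel -> position on the ground plane y = 0,
            # covering x and z in [-1, 1].
            x = -1.0 + 2.0 * (col + 0.5) / width
            z = -1.0 + 2.0 * (row + 0.5) / height
            point = (x, 0.0, z)
            # Ray-traced stage: one shadow ray per pixel towards the light.
            lit = not shadow_ray_occluded(point, light, center, radius)
            line.append(1.0 if lit else 0.2)
        image.append(line)
    return image


if __name__ == "__main__":
    img = render_hybrid(8, 8, light=(0.0, 4.0, 0.0), center=(0.0, 1.0, 0.0), radius=0.5)
    for line in img:
        print("".join("#" if v < 1.0 else "." for v in line))
```

The split mirrors the idea in the text: cheap, well-understood rasterisation decides what is visible, and rays are spent only on the effect that benefits most from them, here a physically placed shadow.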

This marks the graphics industry’s first baby steps into the world of real-time Ray Tracing, something which will become increasingly relevant in the years to come.

When everything comes together, Nvidia’s concurrent workflow system will allow more computational work to be completed than ever before, further parallelising the GPU’s workflow.

Soon, Nvidia’s Tensor cores will be used to improve the image quality of games with DLSS, reducing the computational power required to render high-resolution images and offering the industry’s first AI-driven performance boost. With Deep Learning, Nvidia hopes to fake high-resolution images convincingly enough that gamers will never notice the difference, something we will test at a later date.

With Turing, Nvidia has packed more computational power into a single graphics card than ever before, while also diversifying the compute infrastructure of graphics cards to enable new features, forging a path into the realms of Deep Learning and real-time Ray Tracing.